Summary Project description

|

| CADDY concept |

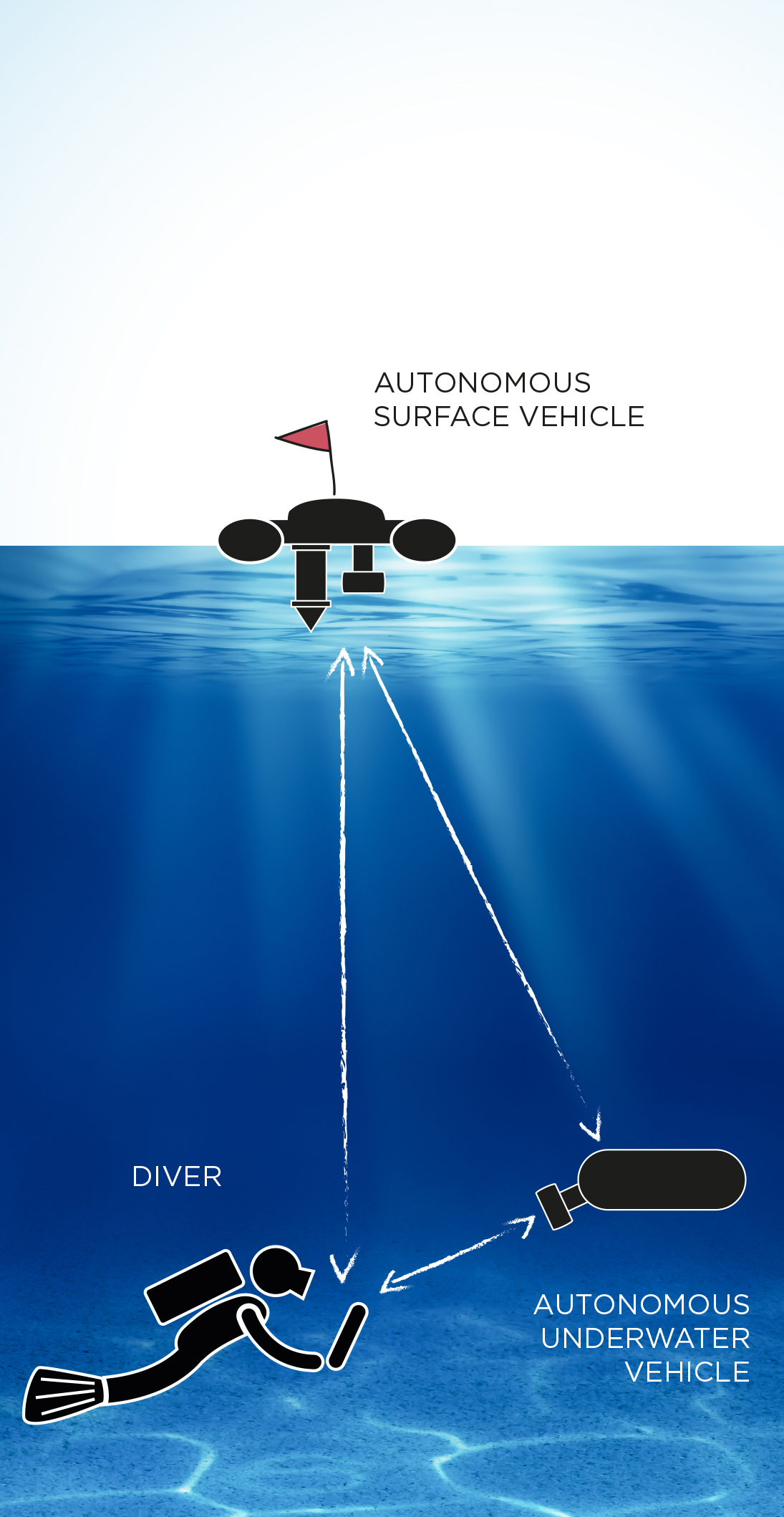

Divers operate in harsh and poorly monitored environments in which the slightest unexpected disturbance, technical malfunction, or lack of attention can have catastrophic consequences. They manoeuvre in complex 3D environments and carry cumbersome equipment while performing their missions. To overcome these problems, CADDY aims to establish an innovative set-up between a diver and companion autonomous robots (underwater and surface) that exhibit cognitive behaviour through learning, interpreting, and adapting to the diver’s behaviour, physical state, and actions.

The CADDY project replaces a human buddy diver with an autonomous underwater vehicle and adds a new autonomous surface vehicle to improve monitoring, assistance, and safety of the diver’s mission. The resulting system plays a threefold role similar to that of a human buddy diver: i) the buddy “observer” that continuously monitors the diver; ii) the buddy “slave” that is the diver's “extended hand” during underwater operations, performing tasks such as “do a mosaic of that area”, “take a photo of that” or “illuminate that”; and iii) the buddy “guide” that leads the diver through the underwater environment.

The envisioned threefold functionality will be realized through S&T objectives to be achieved within three core research themes. The “Seeing the Diver” theme focuses on 3D reconstruction of the diver model (pose estimation and recognition of hand gestures) through remote and local sensing technologies, thus enabling behaviour interpretation; the “Understanding the Diver” theme focuses on adaptive interpretation of the model and physiological measurements of the diver in order to determine the state of the diver; and the “Diver-Robot Cooperation and Control” theme is the link that enables diver interaction with underwater vehicles with rich sensory-motor skills, focusing on cooperative control and optimal formation keeping with the diver as an integral part of the formation.

The main results obtained for each of the defined CADDY objectives are summarized below.

TO1. Development of a cooperative multi-component system capable of interacting with a diver in unpredictable situations and supporting cognitive reactivity to the non-deterministic actions in the underwater environment.

- The BUDDY AUV was developed as the primary underwater vehicle, custom made for interaction with divers. BUDDY carries an underwater tablet as a means of interaction with the diver. In addition, it is equipped with a stereo camera, a mono camera, and a multibeam sonar, all used for "seeing the diver".

- MEDUSA ASV was modified to serve as a primary surface vehicle in the CADDY scenario

- The backup vehicles are e-URoPe (a hybrid between AUV and an ROV) and PlaDyPos USV

- A diver application was developed for commercially available Android tablets, placed in a custom-made underwater housing, for the purpose of interfacing the diver with the robotic vehicles

TO2. Establishing a robust and flexible underwater sensing network with reliable data distribution, and sensors capable of estimating the diver pose and hand gestures.

TO2.a. Testing and evaluation of sensors that will enable pose estimation and hand gesture identification in the underwater environment.

- High-resolution multibeam sonar can detect the diver's hands, but it is mainly used to detect the diver at larger distances (>3 meters) and to estimate the diver's global pose (direction).

- Stereo camera in combination with monocular camera has been used to determine both diver pose and hand gestures

- A stereo camera housing with flat tempered glass has been custom built to reduce image distortions caused by the common cylindrical housing

- Instead of calibrating underwater directly, a technique was developed to estimate the underwater calibration parameters based on the much easier and well understood in-air calibration and basic measurements of the underwater housing used.

- An innovative body network of inertial sensors called DiverNet has been designed and manufactured in order to reconstruct the pose of the diver

- The wireless version enables data processed on the diver tablet to be transmitted to the surface using acoustic modems.

- Heart rate and breathing sensor have been integrated with the DiverNet, allowing recording of heart rate, breathing data and motion data during the experiments

TO2.b. Propagation of the acquired data through the network to each agent with strong emphasis on securing reliable data transmission to the command centre for the purpose of automatic report generation and timely reporting in hazardous situations.

- a new generation of small scale USBL and acoustic modems has been developed

- a new calibration procedure for the developed USBLs has been developed, resulting in significantly reduced azimuth errors (< 5 deg) and elevation errors (< 4 deg)

- A software package was designed to allow live mission monitoring and mission replay

- the developed acoustic communication scheme enables the transmission of data used for diver monitoring and alerting, as well as vehicle telemetry

- The Diver State Monitor shows the supervisor the diver’s status in real time through several critical values: diver depth, average flipper rate, heart rate, breathing rate, motion rate, PAD (pleasure, arousal, dominance) space, diver state alarms, and predefined chat

- Chat GUI enables communication using a predefined set of messages such as “Are you OK?”, “OK”, “Repeat instruction”, “Dive out”, “Normal operation”, “Breathe faster”, “Issue alarm”, etc.

- The diver tablet itself gathers and pre-processes the DiverNet sensor data: heart rate is calculated via a digital sensor on the diver’s torso; breathing rate is calculated from the pressure sensor included in the DiverNet; paddling rate is calculated from the accelerometer measurements of the DiverNet nodes placed on the diver’s feet; and diver motion rate is calculated from the DiverNet nodes placed on the hands, feet, and head of the diver

- Mission replay software collects data from all vehicles and the diver tablet and visualizes them for clearer presentation to the user
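As an illustration of the kind of pre-processing listed above, the sketch below estimates a paddling rate from one accelerometer axis by counting upward zero crossings of the mean-removed signal. This is only one plausible approach and is not taken from the CADDY software; the function name `estimate_rate_hz`, the 50 Hz sample rate, and the synthetic signal are all assumptions made for the example.

```python
import math


def estimate_rate_hz(samples, sample_rate_hz):
    """Estimate the rate of a periodic signal by counting upward zero crossings."""
    if len(samples) < 2:
        return 0.0
    mean = sum(samples) / len(samples)
    centered = [s - mean for s in samples]  # remove constant offset (e.g. gravity)
    upward = sum(1 for a, b in zip(centered, centered[1:]) if a < 0.0 <= b)
    duration_s = (len(samples) - 1) / sample_rate_hz
    return upward / duration_s


# Synthetic stand-in for one accelerometer axis (hypothetical data):
# 0.5 Hz kicking on top of a gravity offset, 20 s sampled at 50 Hz.
SAMPLE_RATE_HZ = 50.0
synthetic_axis = [
    9.81 + math.sin(2.0 * math.pi * 0.5 * (n / SAMPLE_RATE_HZ) + 0.3)
    for n in range(int(20 * SAMPLE_RATE_HZ) + 1)
]
paddling_rate_hz = estimate_rate_hz(synthetic_axis, SAMPLE_RATE_HZ)
print(f"{paddling_rate_hz * 60.0:.1f} kicks per minute")
```

Real sensor data would need low-pass filtering before counting crossings, since noise near zero produces spurious crossings.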

TO2.c. Adaptive learning mechanism for communications scheduling based on the detection of bubble streams produced by the diver.

- reliability investigations have been carried out into how transmissions may be time synchronised with the diver’s breathing pattern in order to minimise the effects of noise from the breathing apparatus
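One way to picture breathing-synchronised transmission is as a scheduling problem: given predicted noisy intervals (exhalations that produce bubble streams), find the earliest gap long enough for a packet. The sketch below is a hypothetical illustration, not the scheme investigated in the project; `next_quiet_slot`, the interval values, and the packet duration are assumed for the example.

```python
def next_quiet_slot(now_s, packet_s, noisy_intervals):
    """Return the earliest start time >= now_s at which a packet lasting
    packet_s seconds fits without overlapping any (begin, end) noisy interval."""
    start = now_s
    for begin, end in sorted(noisy_intervals):
        if end <= start:
            continue  # this noisy interval is already over
        if start + packet_s <= begin:
            break  # the packet fits before the next noisy interval
        start = max(start, end)  # otherwise wait until the noise has passed
    return start


# Hypothetical prediction: exhalations of 1.5 s starting every 5 s.
predicted_exhalations = [(1.0, 2.5), (6.0, 7.5), (11.0, 12.5)]
print(next_quiet_slot(0.5, 0.8, predicted_exhalations))
```

In practice the intervals would come from an adaptive estimate of the diver's breathing pattern, which changes with workload, so the prediction would have to be refreshed continuously.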

SO1. Achieve full understanding of diver behaviour through interpretation of both conscious (symbolic hand gestures) and unconscious (pose, physiological indicators) nonverbal communication cues.

SO1.a. Develop efficient and near real-time algorithms for diver pose estimation and gesture recognition based on acoustic and visual conceptualization data obtained in a dynamic and unstructured underwater environment.

- an algorithm for hand gesture recognition from stereo camera imagery was developed, based on a feature aggregation algorithm designed to cope with highly variable underwater imagery

- algorithms for hand detection using multibeam sonar imagery were developed to detect the number of extended diver fingers within the sonar image

- algorithms for diver pose recognition and localization from mono and stereo imagery were used onboard BUDDY in order to determine diver position and orientation relative to the BUDDY AUV

- algorithms for diver pose recognition and localization using sonar imagery were augmented with the diver motion tracking algorithm to ensure precise positioning relative to the diver

- A database of stereo images and point clouds for both the hand gesture recognition module and the diver pose estimation was made available online at http://robotics.jacobs-university.de/datasets/2017-underwater-stereo-dataset-v01/.

SO1.b. Develop adaptive algorithms for interpretation of diver behaviour based on nonverbal communication cues (diver posture and motion) and physiological measurements.

- Artificial neural networks were trained using the DiverNet data to detect different diver activities such as standing, sitting, T-pose, etc. An accuracy of around 90% was achieved, which makes it possible to transmit only this high-level diver activity data to the surface.

- A multilayer perceptron was trained to predict pleasure, arousal and control using breath rate, heart rate and motion rate measurements. This approach proved fairly successful, with classification rates ranging from 60% (dominance/control, high) down to only 18% (pleasure, neutral). The overall classification score was 40%.

- The DiverControlCenter software has been developed for analysing different diver measurements and predicting the diver’s emotional state.

SO2. Define and implement execution of cognitive guidance and control algorithms through cooperative formations and manoeuvres in order to ensure diver monitoring, uninterrupted mission progress, execution of compliant cognitive actions, and human-machine interaction.

SO2.a. Develop and implement cooperative control and formation keeping algorithms with a diver as a part of the formation.

- Diver-BUDDY cooperative controller was developed in order to ensure that BUDDY is positioned in front of the diver regardless of the diver’s orientation. For this purpose, the diver motion estimator was used together with the measurements obtained from stereo cameras, sonar and USBL.

- a large number of structured experiments, consisting of the “approach” phase, the “rotation” experiment, and the “translation” experiment, were conducted in order to ensure repeatability and analyse different sources of uncertainty.

- experiments with virtual and real divers have demonstrated very satisfactory results of the developed cooperative control system

- Diver-BUDDY-USV formation control was developed to keep the surface vehicle close to the underwater agents, which improves, among other things, acoustic communication with them, while avoiding a position directly above them for safety reasons. The implemented solution is based on the artificial potential field method.

- a large number of experiments have shown satisfactory formation-keeping performance, with maximum errors of 1.1 meters.
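The artificial potential field idea mentioned above can be sketched in a few lines: an attractive term pulls the surface vehicle toward the diver's horizontal position, and a repulsive term pushes it away when it gets too close, so the vehicle settles nearby but not directly overhead. The sketch below uses the textbook quadratic attractive and inverse-distance repulsive potentials; the function name, gains, and radius are illustrative assumptions, not the values or the exact formulation used in CADDY.

```python
import math


def usv_velocity_command(usv_xy, diver_xy, influence_radius_m=3.0,
                         k_att=0.5, k_rep=4.0, v_max=1.0):
    """Horizontal velocity command (vx, vy) from a simple potential field."""
    dx = diver_xy[0] - usv_xy[0]
    dy = diver_xy[1] - usv_xy[1]
    dist = math.hypot(dx, dy)
    if dist < 1e-9:
        return (v_max, 0.0)  # exactly overhead: leave in an arbitrary direction
    ux, uy = dx / dist, dy / dist  # unit vector pointing toward the diver
    # Attractive term: negative gradient of 0.5 * k_att * dist**2.
    speed = k_att * dist
    # Repulsive term: negative gradient of 0.5 * k_rep * (1/dist - 1/d0)**2,
    # active only inside the influence radius d0.
    if dist < influence_radius_m:
        speed -= k_rep * (1.0 / dist - 1.0 / influence_radius_m) / dist**2
    speed = max(-v_max, min(v_max, speed))  # saturate to the vehicle speed limit
    return (speed * ux, speed * uy)


# Simulate the surface vehicle starting 10 m away from a stationary diver.
position = (10.0, 0.0)
for _ in range(400):
    vx, vy = usv_velocity_command(position, (0.0, 0.0))
    position = (position[0] + 0.1 * vx, position[1] + 0.1 * vy)
print(f"final horizontal distance: {math.hypot(*position):.2f} m")
```

With these assumed gains the vehicle settles where the two terms balance, inside the influence radius but well away from the point directly above the diver. A real controller would also account for vehicle dynamics, currents, and the moving diver estimate.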

SO2.b. Develop cooperative navigation techniques based on distributed measurements propagated through acoustically delayed sensing network.

- a navigation filter that fuses DVL and delayed USBL measurements via an extended Kalman filter was developed to provide smooth navigation for all three agents (surface, underwater and diver)

- an algorithm for maximizing observability of the navigation system by using extremum seeking has been developed and tested.

- The mobile beacon, used to track the underwater target, steers so as to reduce a cost function that gives a measure of observability.

- the advantages are that the approach is model-free, constant disturbances are automatically compensated, and no a priori knowledge of the tracked vehicle trajectory is needed

- a single beacon navigation system was implemented for the purpose of navigation using only ranging devices, without angle measurements, using the maximization of the Fisher information matrix. The developed and integrated system consists of an estimator, planner and tracking modules

- conducted trials have shown good results with the surface vehicle asymptotically tracking the underwater target
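To make the Fisher information idea above concrete: for range-only measurements with independent Gaussian noise, each measurement contributes information only along the line between beacon and target, so the determinant of the Fisher information matrix grows when successive measurements are taken from well-separated bearings. The sketch below is a simplified 2D illustration with a static target; `range_fim_2d`, `best_next_position`, and the greedy selection are assumptions for the example and do not reproduce the project's estimator, planner, or tracking modules.

```python
import math


def range_fim_2d(target_xy, beacon_positions, sigma_m=1.0):
    """2x2 Fisher information matrix for a static target observed by ranges only."""
    fxx = fxy = fyy = 0.0
    for bx, by in beacon_positions:
        dx, dy = target_xy[0] - bx, target_xy[1] - by
        rng = math.hypot(dx, dy)
        if rng < 1e-9:
            continue  # direction is undefined when the beacon is on the target
        ux, uy = dx / rng, dy / rng  # unit vector from beacon to target
        fxx += ux * ux / sigma_m**2
        fxy += ux * uy / sigma_m**2
        fyy += uy * uy / sigma_m**2
    return ((fxx, fxy), (fxy, fyy))


def fim_determinant(fim):
    """Determinant of a 2x2 matrix given as nested tuples."""
    return fim[0][0] * fim[1][1] - fim[0][1] * fim[1][0]


def best_next_position(target_xy, visited, candidates, sigma_m=1.0):
    """Greedy choice: the candidate beacon position that maximizes det(FIM)."""
    return max(
        candidates,
        key=lambda c: fim_determinant(range_fim_2d(target_xy, visited + [c], sigma_m)),
    )


# One range was taken from (10, 0); where should the beacon measure from next?
choice = best_next_position((0.0, 0.0), [(10.0, 0.0)],
                            [(20.0, 0.0), (0.0, 10.0), (-10.0, 0.0)])
print(choice)
```

In a real system the true target position is unknown, so the matrix would be evaluated at the current estimate and the planner would have to respect the vehicle's motion constraints.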

SO2.c. Execution of compliant BUDDY tasks initiated by hand gestures.

- compliant behaviour of the overall robotic system with respect to the commands issued by the diver is provided by an automatic selection system that triggers execution of the appropriate autonomous robotic tasks.

SO3. Develop a cognitive mission (re)planner which functions based on interpreted diver gestures that make more complex words.

SO3.a. Develop an interpreter of a symbolic language consisting of common diver hand symbols and a specific set of gestures.

- a gesture-based language for communication between the diver and the robot, called CADDIAN, has been developed. A total of 52 symbols have been defined, together with a “slang” group of symbols for better acceptance by the diving community

- A fault tolerant symbolic language interpreter was developed, allowing robust interpretation of the commands issued by the diver to BUDDY. The developed software comprises a Diver gesture classifier, Phrase Parser, Syntax checker, Command dispatcher, and Tablet Feedback.
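The interpreter stages named above (gesture classification, phrase parsing, syntax checking, command dispatch, and tablet feedback) can be pictured with a toy example. The symbols, grammar, and confidence threshold below are invented for illustration and are not the actual CADDIAN syntax; the point is only that an unclear or malformed phrase results in feedback to the diver rather than in a wrong command.

```python
# Hypothetical vocabulary: command name -> number of numeric arguments.
COMMAND_ARITY = {"TAKE_PHOTO": 0, "GO_UP": 1, "MOSAIC": 2}
DIGITS = {f"NUM_{n}": n for n in range(10)}


def interpret(gestures, min_confidence=0.6):
    """Turn classified gestures [(label, confidence), ...] into a command.

    Returns ("ok", (command, args)) for dispatch, or ("error", message)
    to be shown to the diver as feedback.
    """
    # Classifier stage: reject the phrase if any gesture was unclear.
    for label, confidence in gestures:
        if confidence < min_confidence:
            return ("error", f"unclear gesture near {label}, please repeat")
    labels = [label for label, _ in gestures]
    # Phrase parser: a phrase is START <command> <numbers...> END.
    if len(labels) < 3 or labels[0] != "START" or labels[-1] != "END":
        return ("error", "phrase must begin with START and end with END")
    command, *args = labels[1:-1]
    # Syntax checker: known command with the right number of numeric arguments.
    if command not in COMMAND_ARITY:
        return ("error", f"unknown command {command}")
    expected = COMMAND_ARITY[command]
    if len(args) != expected or not all(a in DIGITS for a in args):
        return ("error", f"{command} expects {expected} numeric argument(s)")
    return ("ok", (command, [DIGITS[a] for a in args]))


phrase = [("START", 0.9), ("MOSAIC", 0.8), ("NUM_2", 0.9), ("NUM_3", 0.95), ("END", 0.9)]
print(interpret(phrase))
```

Rejecting the whole phrase on a single low-confidence gesture is a deliberately conservative design choice for this sketch; a more tolerant interpreter could ask only for the unclear symbol to be repeated.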

SO3.b. Development of an online cognitive mission replanner.

- A modular mission controller framework was designed and developed to manage state tracking, task activation, and reference generation as required to support diver operations. The framework is based on Petri nets enhanced with mutually exclusive and conflict-free mission control.
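A minimal Petri net shows how mutual exclusion between tasks can be enforced structurally: two tasks compete for a single token in a shared place, so only one can be active at a time. The class, place names, and task names below are hypothetical and much simpler than the CADDY mission controller; they only illustrate the mechanism.

```python
class PetriNet:
    """Tiny place/transition net with integer token counts."""

    def __init__(self, marking):
        self.marking = dict(marking)
        self.transitions = {}

    def add_transition(self, name, inputs, outputs):
        self.transitions[name] = (tuple(inputs), tuple(outputs))

    def enabled(self, name):
        inputs, _ = self.transitions[name]
        return all(self.marking.get(p, 0) >= inputs.count(p) for p in set(inputs))

    def fire(self, name):
        if not self.enabled(name):
            raise RuntimeError(f"transition {name} is not enabled")
        inputs, outputs = self.transitions[name]
        for place in inputs:
            self.marking[place] -= 1  # consume one token per input arc
        for place in outputs:
            self.marking[place] = self.marking.get(place, 0) + 1  # produce tokens


# Two hypothetical tasks share one "vehicle_free" token, so they exclude each other.
net = PetriNet({"vehicle_free": 1, "photo_requested": 1, "mosaic_requested": 1})
net.add_transition("start_photo", ["vehicle_free", "photo_requested"], ["photo_running"])
net.add_transition("finish_photo", ["photo_running"], ["vehicle_free"])
net.add_transition("start_mosaic", ["vehicle_free", "mosaic_requested"], ["mosaic_running"])
net.add_transition("finish_mosaic", ["mosaic_running"], ["vehicle_free"])

net.fire("start_photo")
print(net.enabled("start_mosaic"))  # the mosaic must wait while the photo task runs
net.fire("finish_photo")
print(net.enabled("start_mosaic"))
```

Because the exclusion is encoded in the net structure rather than in ad hoc flags, properties such as the absence of conflicting task activations can be checked on the net itself.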

The central point of year 2 of the project was the first validation trials, which took place in Biograd na Moru, Croatia, in October 2015. The research results demonstrated there focused on five experiments, all of which were successfully executed. A validation procedure was defined for each experiment.

In the third year of the project, the focus was on preparing all the required algorithms for the final validation trials. A software integration week was organized in May in Zagreb, during which simulations were carried out along with real diver data acquisition. This proved very useful for integrating the software developed by different partners and for identifying what needed further development. The final validation trials took place in October 2016 in Biograd na Moru, Croatia, where all the developed algorithms were evaluated by end-user divers.

More on CADDY project progress can be found on the website http://caddy-fp7.eu/, and live reports from experiments are available on our Facebook page https://www.facebook.com/caddyproject.